What is Data Learning and Why is it Important?

The top three technology disruptors of the past two decades have been mobile devices, social media, and cloud computing, all of which have since become ubiquitous. They play an essential part in our lives and have transformed the way we communicate and interact with digital content. At the heart of these trends is a tremendous amount of data, which grows with the technology and, in turn, shapes how that technology is used. Big Data is changing the way businesses analyze and interpret data.

Many enterprises, excited about how Big Data could improve their bottom and/or top lines, quickly moved to collect massive amounts of data related to every aspect of their business, from marketing campaigns and manufacturing plants to customer 360 views and detailed user interactions.

It wasn’t long before organizations realized that amassing Big Data and investing millions of dollars in their Hadoop or Spark clusters was not enough to push them ahead of their competitors. Many executives soon learned that their data was only “as valuable as the insight that it enabled,” and that insight was only valuable when “actionable.”

There are essentially two main obstacles to turning data into actionable insight: humans and tools.

1. Why are humans a bottleneck?

Despite all the buzz surrounding machine learning, the best strategic decisions are ultimately made by experienced domain experts, who might not be all that comfortable diving into models and data. On the other hand, data scientists who are comfortable with Python and other computational frameworks might lack the domain expertise, and the really good ones are in such high demand that they are hard to come by. For this reason, many companies have dedicated data engineering or business intelligence teams whose main function is to perform this so-called data gymnastics and to present data-driven insights to other business stakeholders. In general, finding individuals with both data and domain expertise remains a key bottleneck for many businesses.

2. Why are today’s data processing tools a bottleneck?

Despite significant progress over the past decade on tooling for Big Data, many organizations still struggle with sifting through large quantities of data efficiently.

In my numerous conversations with execs and data scientists in different industries, I’ve noticed three common situations in which data tools themselves are a bottleneck. Typically, these tools get in the way of “deriving actionable insight from data” when they are too slow, too manual, or too costly. More specifically:

Too Slow.

Real-time analytics over large volumes of data is a myth (and a big marketing lie). Extreme patience (and time) is needed whenever you want to answer a question that requires analyzing a lot of data. Most of us know the frustration of waiting for a website that takes forever to load. Unfortunately, the same is true for almost any tool that has to crunch a lot of data. This comes down to the laws of physics and the limits of hardware. (Stay tuned for a future blog post detailing this further; for now, you can read my keynote from the ACM SIGMOD conference a few years ago.)

Even the fastest data warehouse on the market cannot join a hundred million rows or terabytes of data within a second or two. It’ll probably be more like 20 seconds, or even 20 minutes, depending on your data volume and query complexity.

But is 20 minutes that big of a deal, in the grand scheme of things? Simple answer: yes. Human attention spans are getting radically shorter. Several studies on human-computer interaction have shown that people subconsciously filter their exploration ideas when a computer takes more than a couple of seconds to respond. Remember the last time you were staring at that loading icon for minutes on end, waiting for a dashboard to load? How tempted were you to try a more sophisticated query, or to dig even deeper? A lack of desire to dive deeper and get past high-level metrics can lead to missed opportunities or even superficial conclusions.

Too Manual.

Sometimes, you need to know the answer before you can ask the right question. Sure, I may be exaggerating a little, but how else are you going to uncover the unknown unknowns? In today’s data ecosystem, most tools are designed around the implicit assumption that you will guide the tool (rather than the other way around).

For example, there are plenty of great BI tools that allow you to monitor various KPIs. But what if an important trend emerges in your underlying data without immediately affecting one of your KPIs? A trend like this might go unnoticed for quite a while, and by the time it is reflected in the KPIs and you take appropriate action, it may already have led to a considerable amount of lost (or unrealized) revenue. Even when a high-level KPI is affected, a large number of potential causes must be inspected manually to separate the signal from the noise and arrive at the root cause.

Too Costly.

Sometimes we are forced to choose between spending a lot of time and spending a lot of money. While throwing more money at a problem might feel like a quick and easy fix, in many cases it is not economical, given the limited resources and numerous priorities of an organization.

That’s where most hosted analytics vendors make their fortunes: by offering a variety of tiered solutions to help you burn through your cash. I remember an interesting conversation I once had with a product leader at a cloud-based analytics vendor. I asked if their customers ever complained about dealing with slow queries, and the response was, “No, they know they can pay more to make them faster.” My follow-up question, “But won’t it become too expensive?”, was met with a proud declaration: “We have a perfect solution for that too: they can set a budget for their team and we will send them an automated alert once they exceed that budget!”

So what do customers of these hosted solutions do when they receive the notification that they’ve burned through their budget? Many data teams settle for querying their data as of last night instead of their latest data. It’s not that they don’t want to look at their latest data; they just hate spending their entire departmental budget on the data warehousing bill.

So what is the solution? If you ask me: “Data Learning.”

At the end of the day, you have to come to terms with the fact that it’s not possible to replace your domain experts with a fully automated machine learning algorithm. You need a team of experts to extract actionable insight from your data, but you also need to empower them with the right tools to get more done with less.

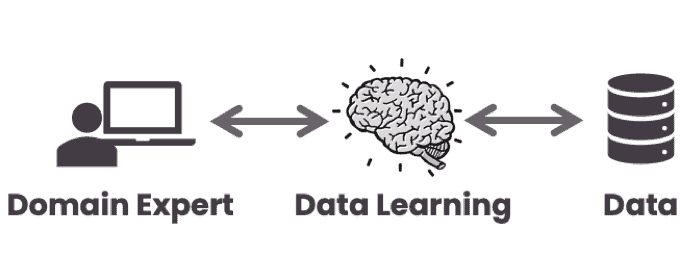

There should always be a layer between your data and your analysts: one that relies on state-of-the-art machine learning algorithms to learn your data, and then uses those learned models to automate and accelerate the tedious aspects of your experts’ interactions with that data.

I call this paradigm “Data Learning”: the idea of letting humans and computers each focus on what they do best.

A data learning solution should be able to help analysts with all three stages of obtaining actionable insight from their data:

- Detection: Models can capture the underlying distribution of the data and understand it better than any single individual in the organization. The models can then be the first to detect when something is out of the ordinary. They can identify new trends, spot data quality issues, or uncover unexpected outliers, and then run them by a domain expert to decide whether further investigation is required.

- Analysis: Once a new investigation starts, the models can be used to accelerate the analysis itself. Instead of processing terabytes of raw data, in many cases consulting a few kilobytes of model parameters can go a long way toward reducing the time-to-insight, as well as considerably lowering computational costs. This idea applies to both approximate and exact query processing. In a nutshell, this is all about “recycling compute cycles”: once a computation is performed, it can be reused for future queries that share all or part of that computation. Doing this correctly and at scale is far beyond what humans can do manually. However, a principled, automated inference process can determine when and how to reuse previous computations most efficiently.

- Explanation: Once the analysis is complete, the domain expert can rely on auto-generated models to quickly narrow down their search to a handful of directions. The goal is to focus on (in)validating the most promising hypotheses, instead of going through a tedious and haphazard process of looking everywhere and getting nowhere.
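To make the “recycling compute cycles” idea from the Analysis stage a bit more concrete, here is a toy Python sketch of one well-known form of computation reuse: scanning the raw rows once to cache per-group SUM and COUNT partial aggregates, then answering later, coarser or derived queries from that cache instead of rescanning. This is an illustrative assumption only, not a description of how Keebo or any other product works; the `ROWS` table, the `AggregateCache` class, and its `sum_by`/`avg_by` methods are all hypothetical.

```python
from collections import defaultdict

# Hypothetical toy fact table: (region, product, amount) rows.
ROWS = [
    ("east", "widget", 10.0),
    ("east", "gadget", 5.0),
    ("west", "widget", 7.0),
    ("west", "widget", 3.0),
]

REGION, PRODUCT = 0, 1  # positions of the grouping columns in each row


class AggregateCache:
    """Hypothetical cache of per-(region, product) SUM and COUNT partials.

    The raw rows are scanned at most once; later queries that group by a
    coarser key (region alone, or product alone) are answered by rolling up
    the cached partials instead of rescanning the rows.
    """

    def __init__(self, rows):
        self.rows = rows
        self.scans = 0         # number of full passes over the raw rows
        self._partials = None  # {(region, product): (sum, count)}

    def _get_partials(self):
        # Compute the fine-grained partial aggregates only on first use.
        if self._partials is None:
            self.scans += 1
            acc = defaultdict(lambda: [0.0, 0])
            for region, product, amount in self.rows:
                entry = acc[(region, product)]
                entry[0] += amount
                entry[1] += 1
            self._partials = {key: (s, c) for key, (s, c) in acc.items()}
        return self._partials

    def _rollup(self, key_index):
        """Combine cached partials into (sum, count) per coarser group."""
        sums, counts = defaultdict(float), defaultdict(int)
        for key, (s, c) in self._get_partials().items():
            sums[key[key_index]] += s
            counts[key[key_index]] += c
        return sums, counts

    def sum_by(self, key_index):
        """SUM(amount) GROUP BY the column at key_index."""
        sums, _ = self._rollup(key_index)
        return dict(sums)

    def avg_by(self, key_index):
        """AVG(amount) GROUP BY the column at key_index, derived as SUM / COUNT."""
        sums, counts = self._rollup(key_index)
        return {k: sums[k] / counts[k] for k in sums}


if __name__ == "__main__":
    cache = AggregateCache(ROWS)
    print(cache.sum_by(REGION))
    print(cache.avg_by(PRODUCT))
    print("raw scans:", cache.scans)  # the second query reused the first scan
```

Caching SUM and COUNT rather than AVG is the key design choice here: averages from different groups cannot simply be combined, but sums and counts can, so a single cached pass can serve both `sum_by` and `avg_by` at any coarser grouping. A real system would also have to decide when cached results go stale or no longer cover a new query’s filters, which this sketch ignores.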

This idea, which started as an academic research project, quickly gained traction and was picked up by companies in diverse industries, from tiny startups to Fortune 100 multinationals, and has since evolved into a commercial-grade Data Learning platform called Keebo. At Keebo, we have built a fully automated platform that learns both lossy and lossless models and can deliver both exact and approximate answers to analytical queries 10–200x faster, without requiring customers to write a single line of code or to abandon their existing data warehouse or BI stack. Keebo’s primary design goal has been seamless integration with the customer’s existing infrastructure, regardless of which BI tool (Looker, Tableau, etc.) or data warehouse/lake platform (Snowflake, Redshift, Presto, etc.) they use.

This past year and a half has been an extremely rewarding experience for us at Keebo. We’ve seen firsthand the night-and-day difference that Data Learning as a new technology has made for our customers. From accelerating their analytics and reducing their compute and data warehousing costs to automating their data engineering tasks and improving their internal and external user experience, each customer has realized the value of Data Learning in its own way.

That said, I believe we have only scratched the surface when it comes to the full potential of Data Learning. Just as machine learning revolutionized the consumer experience, Data Learning will revolutionize how enterprises access, consume, visualize, and analyze their data.